DeepSeek-V3

A state-of-the-art Mixture-of-Experts language model with 671B total parameters, of which 37B are activated per token, delivering performance competitive with leading closed-source models.
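
To put the two parameter counts in perspective, the short sketch below computes what share of the model is active for any single token. Only the 671B and 37B figures come from the description above; the function name `active_fraction` is a hypothetical helper introduced for illustration.

```python
def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Return the share of parameters used per token in a sparse MoE model.

    Both arguments are parameter counts in billions. This is a hypothetical
    helper for illustration, not part of any DeepSeek tooling.
    """
    if total_params_b <= 0 or not 0 <= active_params_b <= total_params_b:
        raise ValueError("expected 0 <= active <= total and total > 0")
    return active_params_b / total_params_b


# Figures quoted in the model description above.
TOTAL_B = 671.0   # total parameters, in billions
ACTIVE_B = 37.0   # parameters activated per token, in billions

frac = active_fraction(TOTAL_B, ACTIVE_B)
print(f"{frac:.1%} of parameters active per token")  # prints "5.5% of parameters active per token"
```

In other words, roughly one parameter in eighteen participates in each token's forward pass, which is why per-token compute tracks the 37B figure while memory requirements for hosting the weights track the 671B figure.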

OpenAI's open-source GPT model series featuring configurable reasoning effort, full chain-of-thought capabilities, and agentic functions including web browsing and Python execution.

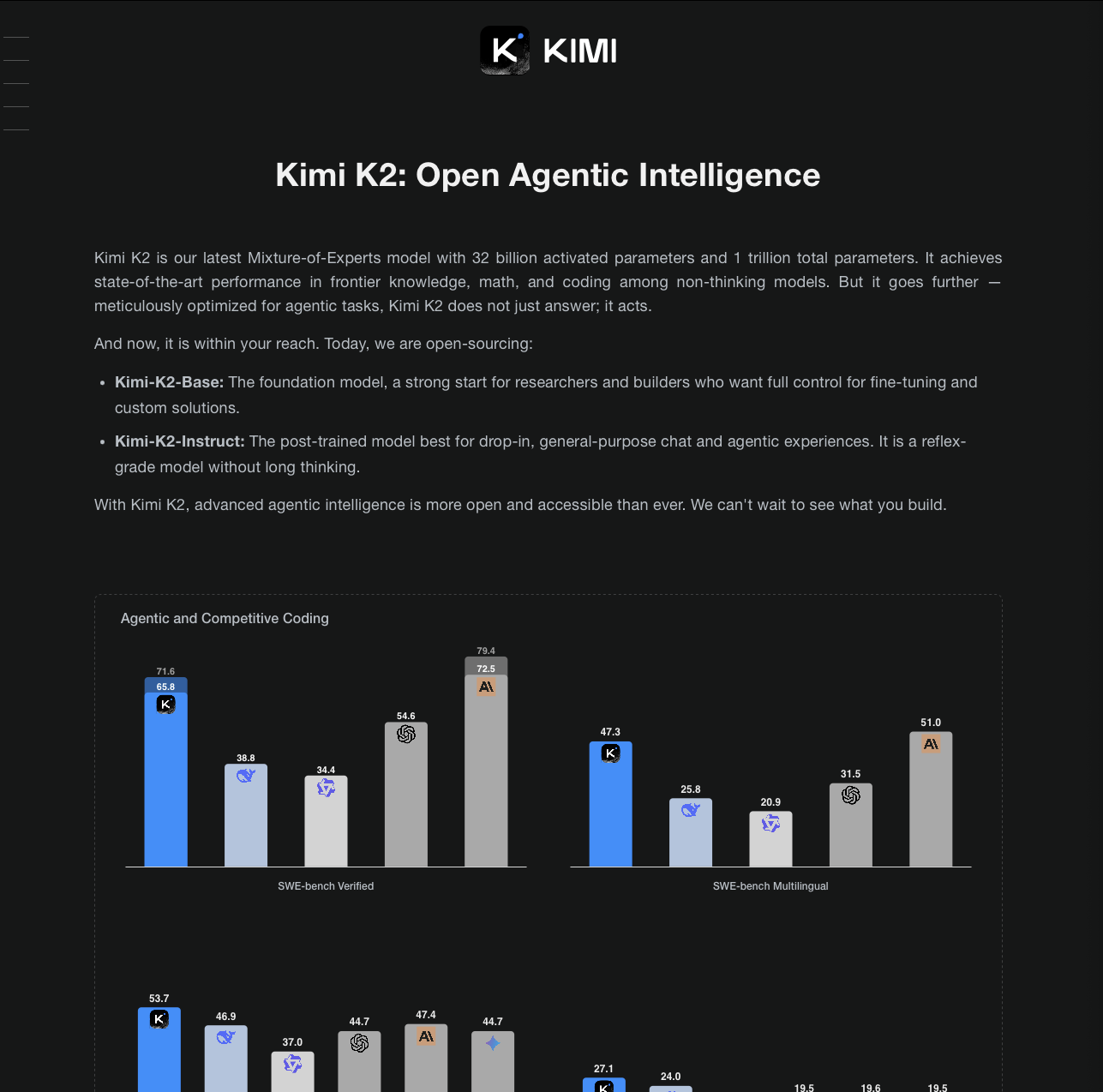

Moonshot AI's trillion-parameter mixture-of-experts model designed for open agentic intelligence, featuring advanced reasoning, tool use, and autonomous problem-solving capabilities.

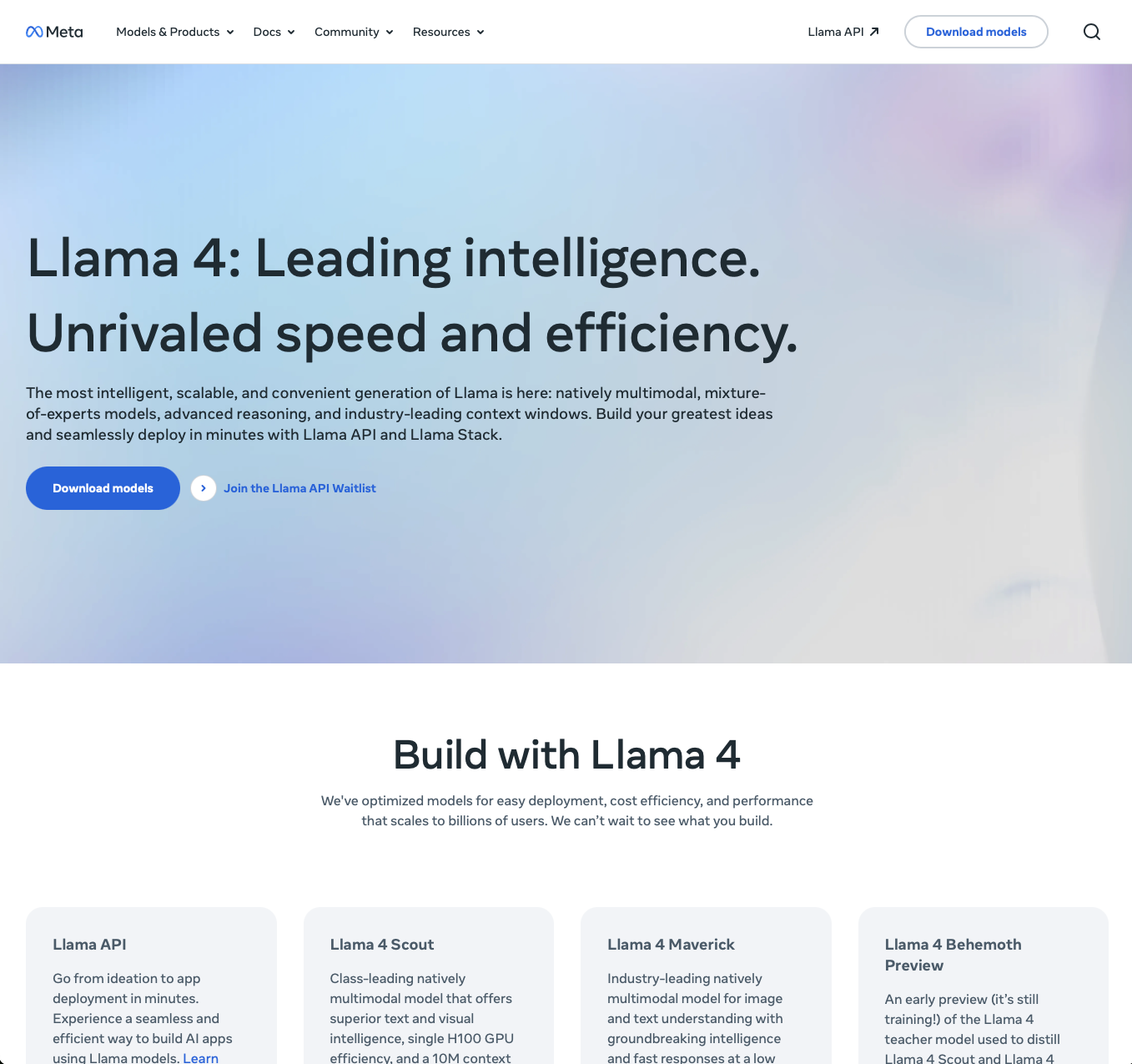

Meta's most advanced generation of Llama models featuring natively multimodal capabilities, mixture-of-experts architecture, advanced reasoning, and industry-leading context windows.