DeepInfra

AI Infrastructure

AI infrastructure platform providing fast ML inference and model deployment through simple APIs, democratizing access to top AI models with competitive GPU pricing.


Overview

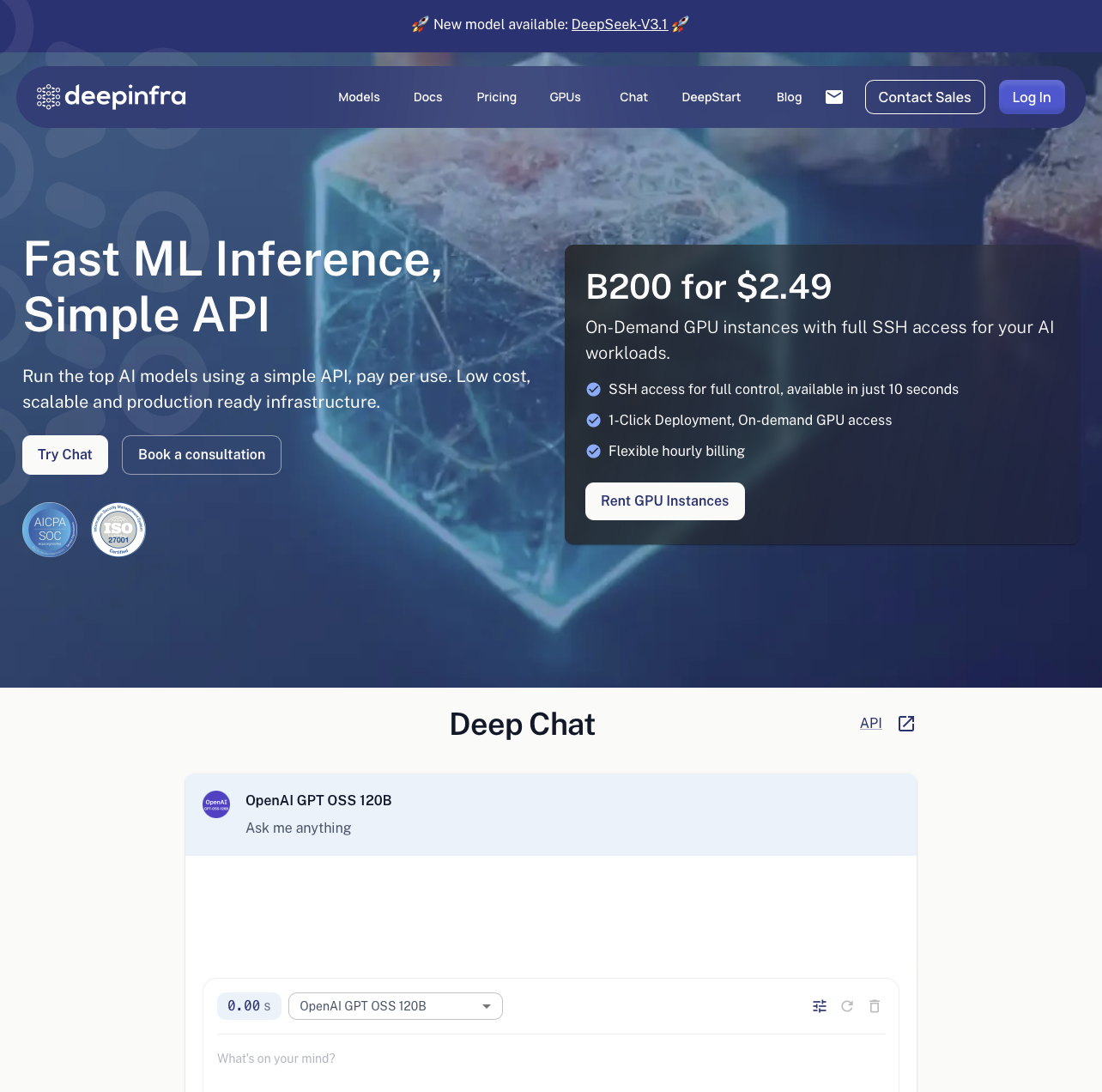

DeepInfra aims to broaden access to top AI models through fast, affordable ML inference and serverless deployment. Founded in 2022 in Palo Alto, California, the company provides a simple API that hides the complexity of running state-of-the-art AI models, so developers and organizations can integrate AI capabilities without managing infrastructure.

With its mission to “democratize AI access,” DeepInfra offers pricing that significantly undercuts major cloud providers, making advanced AI capabilities accessible to startups, developers, and enterprises alike. The platform supports current AI models including Llama, GPT variants, and newer releases such as Moonshot AI’s Kimi K2, all accessible through a unified API.

Fast ML Inference

DeepInfra’s core strength lies in providing sub-second inference times for AI models through optimized infrastructure and intelligent caching. The platform eliminates traditional barriers to AI deployment by offering serverless architecture that automatically scales based on demand, ensuring applications can handle traffic spikes without manual intervention.

The infrastructure is designed for developers who need reliable, fast AI inference without the complexity of managing GPU clusters, model optimization, or scaling infrastructure. This developer-first approach has made DeepInfra a popular choice for startups and established companies building AI-powered applications.

Technology Platform

Infrastructure Architecture

DeepInfra’s platform is built on a foundation of optimized GPU infrastructure specifically designed for ML workloads. The architecture leverages intelligent resource allocation, automatic scaling, and performance optimization to deliver consistent sub-second response times across diverse AI models.

| Component | Traditional Cloud | DeepInfra Platform | Business Impact |
|---|---|---|---|
| GPU Access | $5-10/hr for comparable GPUs | $1.99/hr for B200 GPUs | Roughly 60-80% lower hourly cost |
| Model Deployment | Complex setup and maintenance | One-click serverless deployment | Deployment in minutes instead of hours |
| Scaling | Manual configuration required | Automatic elastic scaling | Growth without downtime |
| API Integration | Multiple vendor APIs | Unified API for all models | Simplified development |
| Performance | Variable based on load | Consistent sub-second inference | Reliable user experience |

Developer Experience

The platform prioritizes developer productivity through comprehensive documentation, intuitive APIs, and minimal setup requirements. Integration requires just a few lines of code, enabling teams to prototype and deploy AI features rapidly without infrastructure expertise.

Key developer benefits include:

- Simple REST API with extensive documentation

- Python SDK for seamless integration

- Comprehensive tool-calling and context support

- Real-time performance monitoring

- Automatic error handling and retry logic
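To make the "few lines of code" claim concrete, the sketch below builds a chat-completion request using only the Python standard library. It is an illustration under stated assumptions, not DeepInfra's official client: the endpoint URL (an OpenAI-compatible route), the `DEEPINFRA_API_KEY` environment variable name, and the `example-org/example-model` model id are all assumptions that do not come from this profile and should be checked against the current DeepInfra documentation.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible chat-completions endpoint; verify against the docs.
API_URL = "https://api.deepinfra.com/v1/openai/chat/completions"


def build_chat_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    key = os.environ.get("DEEPINFRA_API_KEY")  # hypothetical variable name
    if key:  # only call the network when a key is configured
        req = build_chat_request("Say hello.", "example-org/example-model", key)
        print(send(req))
```

Separating request construction from sending keeps the payload easy to inspect and test without network access. The retry and error handling listed above are described as platform features and are not reproduced in this sketch.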

Core Products

DeepInfra API

The flagship API service provides unified access to top AI models with fast inference and competitive pricing. Developers can integrate state-of-the-art AI capabilities into applications with minimal setup, supporting various model types from text generation to specialized AI tasks.

Supported Models:

- Llama family (including latest versions)

- GPT variants and alternatives

- Specialized models like Kimi K2

- Custom deployed models

- Vision and multimodal models

GPU Infrastructure

DeepInfra provides direct access to high-performance GPUs at industry-leading prices. The infrastructure is optimized for both training and inference workloads, with flexible pricing models accommodating different usage patterns.

Infrastructure Offerings:

- NVIDIA B200 GPUs at $1.99/hr

- H100 and A100 GPU availability

- Optimized for ML workloads

- No long-term commitments

- Automatic resource optimization

Model Deployment Services

The platform enables deployment of custom models on serverless infrastructure with automatic scaling capabilities. Organizations can deploy proprietary models while leveraging DeepInfra’s optimized infrastructure and API framework.

Deployment Features:

- Serverless model hosting

- Support for PyTorch, TensorFlow, and other frameworks

- Custom deployment pipelines

- Performance monitoring tools

- A/B testing capabilities

Competitive Advantages

Cost Leadership

DeepInfra’s pricing is its clearest differentiator. The company offers B200 GPUs at $1.99/hr, compared with the $5-10/hr cited for comparable GPUs from major cloud providers, which works out to roughly 60-80% lower hourly cost.

This cost advantage extends beyond raw GPU pricing to include:

- No minimum commitments or setup fees

- Pay-per-use billing with per-second granularity

- Free tier for experimentation

- Volume discounts for enterprise customers
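The arithmetic behind these pricing claims can be checked with a short sketch. It uses only the figures quoted in this profile ($1.99/hr for a B200 against a $5-10/hr baseline). The function names are illustrative, and the 15-minute job is a made-up example of how per-second billing differs from paying for a full hour.

```python
def savings_percent(baseline_hourly: float, offered_hourly: float) -> float:
    """Percentage by which the offered hourly rate undercuts the baseline."""
    return (baseline_hourly - offered_hourly) / baseline_hourly * 100


def per_second_cost(hourly_rate: float, seconds: int) -> float:
    """Cost of a job billed per second at the given hourly rate."""
    return hourly_rate / 3600 * seconds


B200_RATE = 1.99  # $/hr, as quoted in this profile

# Compare against both ends of the quoted $5-10/hr baseline range.
for baseline in (5.00, 10.00):
    pct = savings_percent(baseline, B200_RATE)
    print(f"vs ${baseline:.2f}/hr: {pct:.1f}% lower")

# Hypothetical 15-minute job: per-second billing vs. a full billed hour.
job_seconds = 15 * 60
print(f"15-minute job, per-second billing: ${per_second_cost(B200_RATE, job_seconds):.2f}")
print(f"15-minute job, billed as a full hour: ${B200_RATE:.2f}")
```

Against the quoted range the saving is about 60% at the low end and about 80% at the high end, so the frequently cited 80% figure applies only when the baseline is $10/hr.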

Performance Excellence

The platform’s infrastructure is purpose-built for AI workloads, delivering consistent performance that exceeds general-purpose cloud offerings:

- Sub-second inference for most models

- 99.9% uptime SLA

- Global edge locations for low latency

- Intelligent caching and optimization
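For a sense of what a 99.9% uptime figure allows in practice, the sketch below converts an uptime percentage into a downtime budget. The measurement window is an assumption: this profile does not say whether the SLA is evaluated monthly or annually, so both are shown.

```python
def downtime_budget_minutes(uptime_percent: float, period_hours: float) -> float:
    """Minutes of downtime allowed in a period at the given uptime percentage."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (1 - uptime_percent / 100) * period_hours * 60


SLA_PERCENT = 99.9  # figure quoted in this profile

monthly = downtime_budget_minutes(SLA_PERCENT, 30 * 24)   # 30-day month
yearly = downtime_budget_minutes(SLA_PERCENT, 365 * 24)   # 365-day year

print(f"30-day month: {monthly:.1f} minutes")  # 43.2 minutes
print(f"365-day year: {yearly / 60:.2f} hours")  # 8.76 hours
```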

Model Ecosystem

DeepInfra maintains one of the most comprehensive model ecosystems available, ensuring developers have access to cutting-edge AI capabilities:

- Latest open-source models added within days of release

- Proprietary model partnerships

- Custom model support

- Regular performance updates and optimizations

Market Impact

Democratizing AI Access

DeepInfra is lowering traditional barriers to AI adoption by making advanced capabilities accessible to organizations of all sizes. Its approach enables:

Startups and Small Businesses:

- Access to enterprise-grade AI without enterprise budgets

- Ability to compete with larger rivals on AI capabilities

- Rapid experimentation and iteration

Enterprise Organizations:

- Significant cost reduction on AI infrastructure

- Flexibility to experiment with multiple models

- Reduced vendor lock-in

Research and Academia:

- Affordable access to cutting-edge models

- Resources for large-scale experiments

- Collaboration opportunities

Industry Transformation

The company’s impact extends beyond individual customers to drive broader industry change:

- Putting pressure on major cloud providers to reconsider AI pricing

- Accelerating AI adoption across industries

- Enabling new AI-powered business models

- Supporting the open-source AI ecosystem

Future Vision

Under CEO Nikola Borisov’s leadership, DeepInfra continues to push boundaries in making AI infrastructure more accessible. The company’s roadmap includes:

- Expanding model offerings with latest releases

- Further infrastructure optimization

- Enhanced developer tools and integrations

- Global expansion of edge locations

- Advanced monitoring and optimization capabilities

The combination of competitive pricing, strong performance, and developer-friendly design positions DeepInfra as a key enabler of broader AI adoption. Its goal is that advanced AI capabilities are no longer the exclusive domain of tech giants but are available to any organization with innovative ideas.